Why is Rust more reliable than C++ and even garbage-collected languages when it comes to memory safety?

Exploring what memory safety is and the unique way Rust reduces these errors without relying on a garbage collector.

People like me, who come from memory-managed programming languages such as Python, Java, Ruby, and Haskell, often wonder what memory safety is. In these languages, programmers have no direct control over memory unless they tinker with the runtime. Letting the runtime manage memory and letting the programmer manage it each have advantages and disadvantages.

A snippet from Prossimo:

Memory safety is a property of certain programming languages that prevents programmers from introducing certain types of bugs related to how memory is used. Since memory safety bugs are often security issues, languages that are memory-safe are more secure than those that are not.

Why is memory safety such a significant concern?

70% of the vulnerabilities that Microsoft assigns a CVE (Common Vulnerabilities and Exposures) each year continue to be memory safety issues. --Microsoft Security Response Center

Approximately 70% of our high-severity security bugs are related to memory unsafety (that is, errors with C/C++ pointers). Half of these are use-after-free bugs. --The Chromium Projects

Memory safety problems occur because of how C/C++ handle memory. Raw pointers are the root cause of most memory safety vulnerabilities. As the name suggests, a raw pointer carries no information other than the memory location it points to: its ownership and lifetime are tracked by the programmer, not checked by the compiler. Raw pointers are too unrestricted, in the sense that they allow any operation, safe or not. That freedom is useful for interacting directly with hardware, which is inherently unsafe but is made safer through abstractions such as the kernel. However, most tasks don't need direct control over raw pointers, which is why modern C++ provides safer abstractions, such as smart pointers, on top of them.

Pointers are extensively used in C/C++ for various purposes:

Manually managing memory,

Creating references to data without copying all of it (very efficient),

Representing nothing (null pointers),

Manipulating memory directly, among other uses.

However, these pointers are easily misused, and the compiler won't object. Rust, in contrast, doesn't use raw pointers for these everyday tasks; it provides safe abstractions around them, which makes misuse much less likely. Allocating memory and using pointers (references) is also more ergonomic in Rust than in C/C++, and, more importantly, memory-safe.
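Rust does still have raw pointers (`*const T` and `*mut T`), but the language draws a hard line around them. The sketch below (the function name `read_both` is purely illustrative) contrasts an ordinary reference, which the borrow checker verifies, with a raw pointer to the same value, which may be created in safe code but can only be dereferenced inside an `unsafe` block.

```rust
// A sketch contrasting a checked reference with a raw pointer to the same value.
fn read_both(value: i32) -> (i32, i32) {
    let reference: &i32 = &value; // ordinary reference: the borrow checker tracks its lifetime
    let raw: *const i32 = &value; // creating a raw pointer is allowed in safe code...

    let via_reference = *reference; // always fine: the compiler proved `value` is alive

    // ...but dereferencing it is not: `*raw` outside `unsafe` is a compile error.
    // Inside `unsafe`, the programmer, not the compiler, vouches that the pointer is valid.
    let via_raw = unsafe { *raw };

    (via_reference, via_raw)
}

fn main() {
    println!("{:?}", read_both(42));
}
```

In everyday Rust code you only ever touch the reference form; raw pointers are reserved for FFI and low-level abstractions.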

Unlike C/C++, Rust has no new/delete operators or malloc/free calls for you to pair up by hand. Deallocation is automated by the compiler: based on ownership and borrowing rules checked at compile time, it inserts cleanup code where each value's owner goes out of scope. Heap allocation happens through standard library types such as Box, Vec, and HashMap.

(Note: We can call drop to free a value explicitly before the end of its scope, but not more than once on the same value. The standard library also has a type, ManuallyDrop, that prevents the destructor from being called automatically.)
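To make the automatic cleanup visible, here is a minimal sketch. `Tracked` is a hypothetical type (not from the standard library) whose destructor writes its name into a shared log, so we can observe exactly when each `Box` is freed without any explicit free call.

```rust
use std::cell::RefCell;
use std::rc::Rc;

// Illustrative type (not from the standard library) that records when it is freed.
struct Tracked {
    name: &'static str,
    log: Rc<RefCell<Vec<&'static str>>>,
}

impl Drop for Tracked {
    fn drop(&mut self) {
        self.log.borrow_mut().push(self.name);
    }
}

fn drop_order() -> Vec<&'static str> {
    let log = Rc::new(RefCell::new(Vec::new()));
    {
        // Box::new allocates on the heap; there is no matching free/delete call anywhere.
        let _outer = Box::new(Tracked { name: "outer", log: Rc::clone(&log) });
        {
            let _inner = Box::new(Tracked { name: "inner", log: Rc::clone(&log) });
        } // `_inner` goes out of scope: its destructor runs and its heap memory is freed
        log.borrow_mut().push("end of inner block");
    } // `_outer` is freed here
    let order = log.borrow().clone();
    order
}

fn main() {
    println!("{:?}", drop_order()); // ["inner", "end of inner block", "outer"]
}
```

The inner box is freed as soon as its block closes, before the outer one, purely because of where the braces are.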

Rust's type system provides not only type safety but also memory safety: ownership and borrowing are encoded in the types. Advances in type theory make it possible to rule out many memory errors statically, through the type system, without relying on runtime checks. This is also why Rust has a steep learning curve at first: memory safety violations are reported just like type errors, in the same way that passing a string where an integer is expected is rejected at compile time in Java or C++, or at runtime in dynamically typed languages such as Python.

Now, let's delve into how the Rust type system prevents memory-related errors at compile time. It's worth noting that Rust's type system provides benefits beyond memory safety alone.

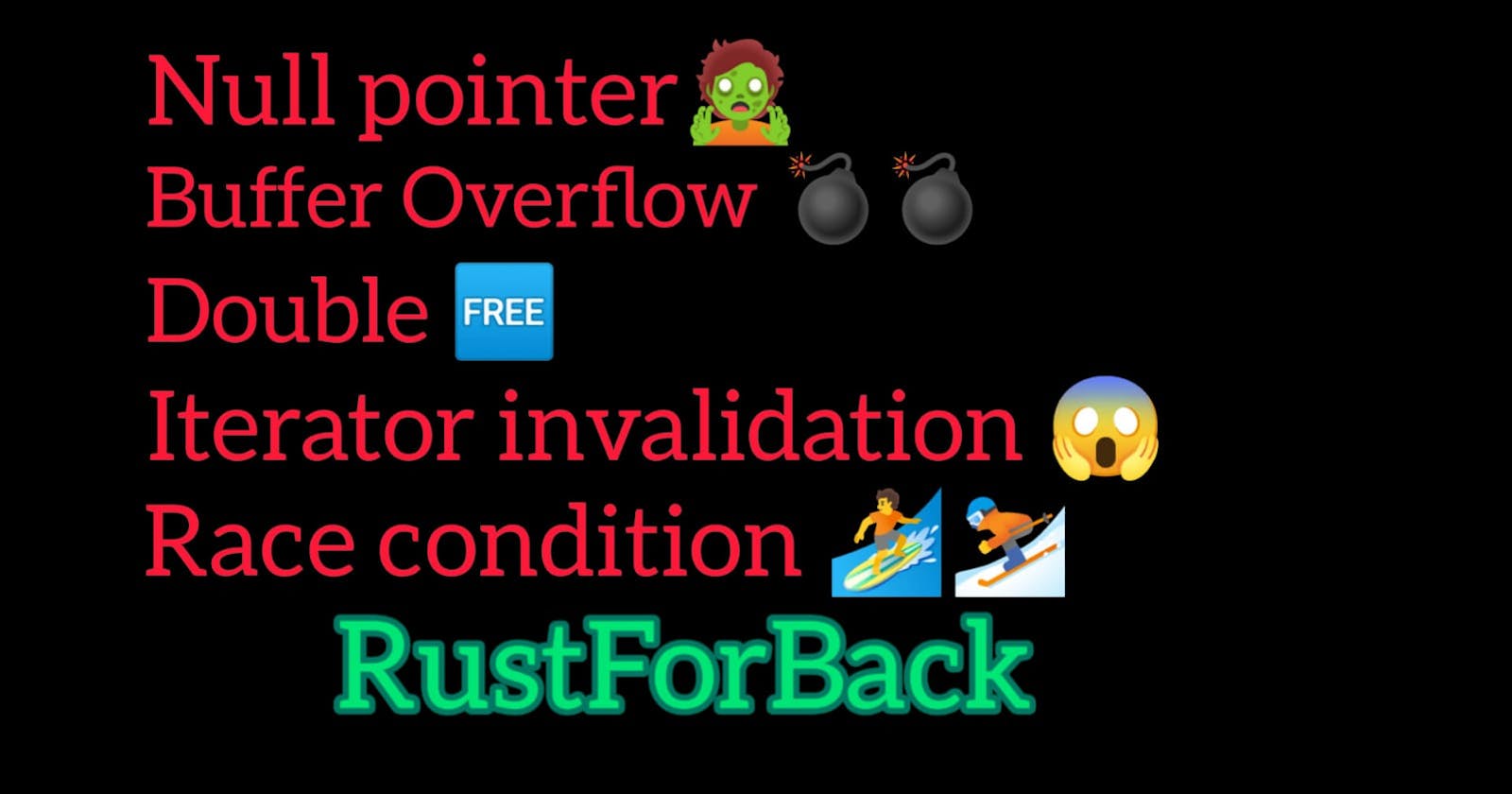

Memory Violation Bugs

Using uninitialized values

Null pointers

Buffer over-read and over-write

Integer overflow

Use after free

Double free

Memory leak

Memory-safe languages that use garbage collectors mitigate the errors above, preventing them either at compile time or at runtime, although not every one of them is prevented. What's unique about Rust is that it rules out most of these errors at compile time without a garbage collector. The exceptions are buffer and integer overflows, which are detected at runtime (integer overflow only in debug builds by default), and memory leaks, which are made unlikely rather than impossible. This is a crucial distinction that sets Rust apart both from garbage-collected languages like Python and Java and from C/C++.

You don't need to worry about Rust syntax for now. You can run the examples yourself in the browser on the Rust Playground: copy a snippet, paste it there, and run it to see what errors you get.

Uninitialized Variables

If you declare a variable without a value, it stays uninitialized until you assign one. In C, an uninitialized variable holds whatever happened to be in that memory, which might even be sensitive data. Languages such as Java use flow analysis to reject reads of local variables that may not have been assigned. In Rust, a variable must be initialized before it can be used at all, and the compiler checks this on every control-flow path. The rule applies to struct and enum constructors as well: every field must be given a value.

```rust
fn main() {
    let m: i32;
    // m = 10; // Uncommenting this line will initialize the variable
    println!("{}", m); // error: `m` is used before it is initialized
}
```

Here, a type annotation is needed because, with the assignment commented out, nothing else tells the compiler what type m has. Running the code above results in a compilation error. Getting this feedback immediately at compile time, rather than at runtime, is the point.

In C, this kind of error is common. When you allocate a struct with malloc, it returns either a pointer to uninitialized memory or NULL if the allocation fails. Dereferencing that NULL pointer is a bug, and even when the allocation succeeds, reading the struct's fields before initializing them is undefined behavior. C is a dangerous yet very efficient programming language.
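Rust's initialization check is flow-sensitive, so declaring a variable first and assigning it later is fine as long as every path assigns it. The sketch below uses hypothetical names (`describe`, `Point`, `origin`) to show this, along with the matching rule for struct literals. Idiomatic code would usually write `let label = if ... { ... } else { ... };`; the deferred form is used here only to show the check.

```rust
// A sketch of Rust's "definitely initialized" check with deferred initialization.
fn describe(n: i32) -> &'static str {
    let label: &'static str; // declared, not yet initialized
    if n < 0 {
        label = "negative";
    } else {
        label = "non-negative";
    }
    // Every path above assigns `label`, so reading it compiles.
    // Deleting the `else` branch would turn this read into a compile error.
    label
}

// Struct literals must name every field; leaving one out is a compile error.
struct Point {
    x: i32,
    y: i32,
}

fn origin() -> Point {
    Point { x: 0, y: 0 } // `Point { x: 0 }` would be rejected: missing field `y`
}

fn main() {
    println!("{} {}", describe(-5), describe(7));
    // Unlike malloc, Box::new can only be called with a fully built value.
    let heap_point = Box::new(origin());
    println!("({}, {})", heap_point.x, heap_point.y);
}
```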

Null Pointers

A null pointer is a pointer that doesn't point to any valid object, and dereferencing one leads to crashes or unpredictable behavior. Null is often used to represent the absence of a value, such as the result of looking up a missing key in a hash map, or returned from fallible operations to signal an error. Forgetting to handle the case where a value might be absent can result in crashes or runtime exceptions in languages like C++, Java, or Python. If an Android app crashes on you, a NullPointerException might be the reason.

Rust takes a different approach to this problem. Safe Rust has no null pointers like C++ and no null value like Java's null or Python's None that can stand in for any object. Instead, Rust handles absence through its type system: the enum type Option<T> encodes whether a value is present or absent. This eliminates null pointer dereferences in safe code.

```rust
// Simplified from the standard library's definition
enum Option<T> {
    Some(T),
    None,
}
```

The Option enum has two variants: Some, which wraps a value, and None, which represents the absence of a value. Option<T> is not a pointer or reference, so trying to dereference it is a compilation error. To reach the value inside, we must handle both cases, which makes it impossible to forget about the absent case by accident.

```rust
fn main() {
    let m = vec![1, 2, 3, 4, 5];
    // Returns `None` if the index is out of bounds
    println!("{:?}", m.get(5));
    // println!("{:?}", m[5]); // This would panic, similar to an IndexError in Python

    let some_or_none = Some(5);
    match some_or_none {
        Some(val) => {
            let add_one = val + 1;
            println!("{}", add_one)
        }
        None => println!("Value is absent"),
    }
}
```
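The hash-map lookup mentioned earlier is a typical place where other languages return null. In Rust, `HashMap::get` returns an `Option<&V>`, so the missing-key case has to be handled before the value can be used. A minimal sketch with a hypothetical helper `describe_age`:

```rust
use std::collections::HashMap;

// Hypothetical lookup helper: HashMap::get returns Option<&V>, never a null pointer.
fn describe_age(ages: &HashMap<&str, u32>, name: &str) -> String {
    match ages.get(name) {
        Some(age) => format!("{} is {}", name, age),
        None => format!("{} not found", name), // the compiler insists this case is handled
    }
}

fn main() {
    let mut ages = HashMap::new();
    ages.insert("Ferris", 8);
    println!("{}", describe_age(&ages, "Ferris"));
    println!("{}", describe_age(&ages, "Corro"));
    // Combinators are a shorter alternative to match:
    let age = ages.get("Corro").copied().unwrap_or(0);
    println!("{}", age);
}
```

`match` spells out both cases; methods such as `unwrap_or` express the same decision more compactly.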

Buffer Overflow (Off-by-One Errors)

Accessing data beyond the length of a collection, whether it's stored on the stack, on the heap, or in static memory, is a buffer overflow, a memory error. It leads to security vulnerabilities, because we end up reading or writing memory that isn't part of our intended data. Let's try to access elements past the end of both stack-allocated and heap-allocated data in Rust. If you're curious about what the stack and heap are, you can find more information here. By default, indexing is bounds-checked at runtime; the compiler removes a check only when it can prove the check can never fail.

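```rust
fn main() {
    // Array (fixed size, stored on the stack)
    let stack_data = [0, 1, 2, 3, 4];
    // Growable vector (elements stored on the heap)
    let heap_data = vec![0, 1, 2, 3, 4];

    // Both have length 5 and are zero-indexed, so index 5 is out of bounds.
    // The array's length is known to the compiler, so this line is a compile-time error.
    let invalid_stack_data = stack_data[5];
    println!("{}", invalid_stack_data);

    // This compiles, but panics at runtime with an "index out of bounds" message.
    let invalid_heap_data = heap_data[5];
    println!("{}", invalid_heap_data);
}
```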

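Indexing the array with the constant 5 is rejected at compile time, since the compiler knows the array's length; if you remove those lines, indexing the vector compiles but panics at runtime. In Rust, a panic stops the program (strictly, the panicking thread) instead of letting it continue with invalid data. Buffer overflow is an example of a spatial memory error: an access to the wrong location.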
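When an index may be out of range, you can choose how to handle it instead of relying on a panic. The sketch below (helper names are hypothetical) shows three options: `slice::get`, which returns an `Option`; iteration, which avoids indexing altogether; and plain indexing, whose out-of-bounds panic is caught with `std::panic::catch_unwind` to show that no memory outside the buffer is touched. The last part assumes the default `panic = "unwind"` setting.

```rust
use std::panic;

// Returns the element at `index`, or None instead of reading past the end.
fn checked_read(data: &[i32], index: usize) -> Option<i32> {
    data.get(index).copied()
}

// Iterating removes manual indexing, so there is no index to get wrong.
fn sum_all(data: &[i32]) -> i32 {
    data.iter().sum()
}

// Plain indexing with a bad index panics instead of touching memory outside the buffer.
fn index_panics(data: Vec<i32>, index: usize) -> bool {
    panic::catch_unwind(move || data[index]).is_err()
}

fn main() {
    let heap_data = vec![0, 1, 2, 3, 4];
    println!("{:?}", checked_read(&heap_data, 5)); // None
    println!("{}", sum_all(&heap_data)); // 10
    println!("{}", index_panics(heap_data, 5)); // true (a panic message is also printed to stderr)
}
```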

Integer Overflow

Integer overflow is conceptually similar to buffer overflow. Integers can represent only a limited range of values, which depends on the number of bits used to store them. There are two main kinds of integers: signed and unsigned. Signed integers, which include negative numbers, represent values from $-2^{n-1}$ to $2^{n-1} - 1$, where $n$ is the number of bits, while unsigned integers represent values from $0$ to $2^n - 1$. For example, 8-bit signed integers range from $-2^7 = -128$ to $2^7 - 1 = 127$, while 8-bit unsigned integers range from $0$ to $2^8 - 1 = 255$: the same number of values, shifted to start at zero. In the Rust standard library, every integer type has associated constants, MIN and MAX, for its minimum and maximum values.

```rust
fn main() {
    let signed_8bit_min = i8::MIN; // -128
    let signed_8bit_max = i8::MAX; // 127
    let unsigned_64bit_min = u64::MIN; // 0
    let unsigned_64bit_max = u64::MAX; // 18446744073709551615
    // Fixed-width integers come in 8, 16, 32, 64, and 128 bits (plus pointer-sized isize/usize).
    println!("{}", signed_8bit_max + 1);
}
```

Adding one to the maximum value (or subtracting one from the minimum value) of any integer type overflows. When the overflow is visible at compile time, as it is here, the compiler rejects the program with an error like the one below.

```text
error: this arithmetic operation will overflow
 --> src/main.rs:7:20
  |
7 | println!("{}", signed_8bit_max + 1);
  |                ^^^^^^^^^^^^^^^^^^^ attempt to compute `i8::MAX + 1_i8`, which would overflow
  |
  = note: `#[deny(arithmetic_overflow)]` on by default
```

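When the overflow can only happen at runtime (for example, with a value read from user input), the behavior depends on the build: debug builds panic with an "attempt to add with overflow" message, while release builds wrap around to the other end of the range (i8::MAX + 1 becomes i8::MIN). So put some effort into choosing the right type and estimating the maximum values you need to represent beforehand.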
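Instead of depending on build-mode behavior, you can choose an overflow policy explicitly. The integer types have `checked_*`, `wrapping_*`, `saturating_*`, and `overflowing_*` methods for this; the sketch below bundles the addition variants into a hypothetical helper, `add_policies`, so the results can be compared side by side.

```rust
// Explicit overflow policies on the standard integer types.
fn add_policies(x: i8, y: i8) -> (Option<i8>, i8, i8, (i8, bool)) {
    (
        x.checked_add(y),     // None if the result doesn't fit
        x.wrapping_add(y),    // two's-complement wrap-around, like an unchecked release build
        x.saturating_add(y),  // clamps to i8::MIN or i8::MAX
        x.overflowing_add(y), // wrapped result plus a flag saying whether it overflowed
    )
}

fn main() {
    println!("{:?}", add_policies(i8::MAX, 1)); // (None, -128, 127, (-128, true))
    println!("{:?}", add_policies(100, 20)); // (Some(120), 120, 120, (120, false))
}
```

`checked_add` is usually the safest default when overflow signals a logic error; wrapping is appropriate for things like hashes and checksums.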

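If you come from Python, which has a single arbitrary-precision int type, or from Java, whose integer types (byte, short, int, long) are all signed, you may notice that Rust has more integer types, including unsigned ones, instead of one integer for all use cases.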

Notes:

Debug mode (cargo build) is used during development: it compiles faster and keeps runtime checks such as integer overflow checks. Release mode (cargo build --release) produces an optimized binary and takes longer to compile.

Use After Free

This bug occurs when a program accesses heap-allocated (dynamically allocated) data after it has been freed. In C/C++, this is undefined behavior: it may crash with a segmentation fault, or it may silently read or corrupt data. It is a bug because once memory is freed, the allocator can hand it out again, so it may already hold unrelated data belonging to another part of the program. Use-after-free is not limited to heap data either: a pointer to a local variable becomes invalid once its function returns and its stack frame is popped. Rust doesn't compile code with these errors.

```rust
fn main() {
    let owned_type = String::from("Rustacean");
    move_(owned_type); // ownership of the String moves into `move_`
    // Error: `owned_type` was moved into `move_`, which freed it when it returned.
    println!("{}", owned_type);
}

fn move_(s: String) {
    println!("{}", s);
} // The String s is freed here
```

A value of an owned type T (for example, String) is cleaned up automatically at the end of its owner's scope. Because of Rust's single-ownership rule, passing owned_type to move_ transfers ownership to its parameter s, so move_ becomes responsible for freeing the memory. Using owned_type again in main after that call is rejected by the compiler, preventing a disaster later on.

T: owned data.

&T and &mut T: borrowed (non-owned) data; &T is a shared borrow and &mut T is an exclusive, mutable borrow.
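The usual fix for the error above is to lend the value instead of giving it away. In the sketch below, `print_borrowed` and `make_greeting` are hypothetical names: the first takes a `&str`, so `main` keeps ownership, and the second shows that returning an owned `String` (rather than a reference to a local) leaves nothing dangling.

```rust
// Borrowing instead of moving: the function gets a &str and the caller keeps ownership.
fn print_borrowed(s: &str) -> usize {
    println!("{}", s);
    s.len()
} // nothing is freed here: `s` is only a borrow

// Returning an owned String is fine; returning `&String` to a local would be rejected,
// because the local String is dropped when the function returns.
fn make_greeting() -> String {
    let local = String::from("Hello");
    local // ownership moves to the caller, so nothing dangles
}

fn main() {
    let owned_type = String::from("Rustacean");
    print_borrowed(&owned_type); // lend it out
    println!("{}", owned_type); // still valid: `main` is still the owner
    println!("{}", make_greeting());
}
```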

Dangling Pointer

A dangling pointer is a pointer that still refers to memory after that memory has been freed. Accessing memory through it is a use-after-free, which can be a security vulnerability. A related problem in C++ is using a pointer that was never initialized, or a null pointer standing in for "nothing", before it points to a valid value.

Example 1

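```rust
fn main() {
    let mut string = String::from("Dynamic String");
    let reference = &string;
    // Explicitly freeing the memory: rejected, because `string` is still borrowed
    drop(string);
    // Mutable access after the value was moved: rejected ("borrow of moved value")
    string.push_str("pushing more elements");
    println!("{}", reference);
}
```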

After dropping the string, we try to push more elements into it. If this were allowed, we would be writing into memory that no longer belongs to string and may already have been handed to another allocation. Reading through reference after the string is freed would likewise return whatever now occupies that memory, just like uninitialized data. Rust rejects both the drop (the string is still borrowed) and the later use (the value has been moved).

Example 2

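```rust
fn main() {
    let mut vector = vec![0, 1, 2, 3];
    let reference = &vector[0]; // shared borrow of the first element
    vector.push(4); // error: cannot borrow `vector` as mutable because it is also borrowed
    println!("{}", reference);
}
```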

Why does pushing onto the vector in the third line affect the reference created in the second line? A vector is a dynamic collection that can grow or shrink, unlike a fixed-size array, and both operations can move its buffer. When we push an element and the new length would exceed the vector's capacity, the vector allocates a larger buffer, moves its elements there, and frees the old buffer. The reference stored in reference would then point into freed memory. Rust doesn't allow this. It is an example of a temporal memory error: accessing memory at a time when it is no longer valid.
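Example 2 can be made to compile in two straightforward ways, sketched below with hypothetical function names: copy the element out (an `i32` is `Copy`, so no borrow remains), or finish using the reference before pushing. The borrow checker understands that a borrow ends at its last use.

```rust
// Two ways to make Example 2 compile.
fn first_then_push(mut vector: Vec<i32>) -> (i32, Vec<i32>) {
    let first = vector[0]; // i32 is Copy: `first` is an independent value, not a borrow
    vector.push(4); // allowed: no reference into `vector` is still alive
    (first, vector)
}

fn print_then_push(mut vector: Vec<i32>) -> Vec<i32> {
    let reference = &vector[0];
    println!("{}", reference); // last use of `reference`: the borrow ends here
    vector.push(4); // allowed: the borrow is already over
    vector
}

fn main() {
    println!("{:?}", first_then_push(vec![0, 1, 2, 3]));
    println!("{:?}", print_then_push(vec![0, 1, 2, 3]));
}
```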

Android uses Hardware-assisted AddressSanitizer (HWASan) to detect use-after-free (UAF) and dangling-pointer bugs, relying on the Top Byte Ignore (TBI) feature of ARM hardware to tag pointers. However, this approach is probabilistic: if a freed and reallocated block happens to receive the same tag, a stale access can go undetected. Rust, on the other hand, rules out these bugs at compile time, without hardware support or runtime memory overhead. That is truly remarkable, considering how much effort it takes to guard against such memory errors in C++.

Example 3

```rust
let nothing: Option<String> = None;
```

It's just one line, but it saves us from the "billion-dollar mistake" (Tony Hoare's name for the null reference) that C++ makes easy to reproduce. The type annotation is needed because None alone doesn't tell the compiler what type a Some value would hold. To get at the data inside an Option, we must handle the None case, for example with pattern matching. None is a variant of the Option enum, which is generic, so it works with any type we want.
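Option does not cost anything over a null pointer when it wraps a reference: the standard library guarantees that `Option<&T>` has the same size as `&T`, using the null address (which a valid reference can never hold) to represent `None`. The sketch below checks that, and uses a hypothetical helper `first_long_word` to show "no result" expressed as `None`.

```rust
use std::mem::size_of;

// Option<&T> represents None with the null address, so it is exactly pointer-sized.
fn option_ref_is_pointer_sized() -> bool {
    size_of::<Option<&String>>() == size_of::<*const String>()
}

// Hypothetical helper: "no result" is None, not a null pointer.
fn first_long_word(words: &[String], min_len: usize) -> Option<&String> {
    words.iter().find(|w| w.len() >= min_len)
}

fn main() {
    let nothing: Option<String> = None;
    println!("{:?}", nothing);
    let words = vec![String::from("hi"), String::from("Rustacean")];
    if let Some(word) = first_long_word(&words, 5) {
        println!("found {}", word);
    }
    println!("{}", option_ref_is_pointer_sized());
}
```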

Double Free

A double free occurs when a program frees memory that has already been freed, for instance by calling free twice on the same pointer in C, or delete twice in C++. Why would anyone free memory twice? Not on purpose: in large codebases it happens by accident, because nothing ties where memory is allocated to where it is freed. Freeing twice corrupts the allocator's internal bookkeeping and often crashes the program, for example with a segmentation fault. This is why C++ smart pointers such as std::unique_ptr and std::shared_ptr help: they tie deallocation to an owner's scope.

After the first free, the allocator may hand the same memory to a later allocation, which overwrites it with its own data. The second free then releases memory that now belongs to that new allocation, which can crash the program later or corrupt its data. Besides causing crashes, it might expose private data if the memory was reused to store it.

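Rust prevents double-free errors through its single-ownership rule: each value has exactly one owner. (Reference-counted smart pointers such as Rc allow shared ownership, but they still free the value only once, when the last owner goes away.) When a value of a non-Copy type is assigned to a new variable, the new variable becomes the owner and the old one can no longer be used.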

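```rust
fn main() {
    let old_owner = String::from("Hello, Fellows");
    let new_owner = old_owner; // ownership moves; the heap data is not copied
    println!("{}-{}", old_owner, new_owner); // error: borrow of moved value `old_owner`
}
```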

In the above code, the heap-allocated data is moved to new_owner instead of being deep-copied, as copying a std::string would do in C++. Due to the single-ownership rule, only new_owner frees the data at the end of the scope. Rust also rejects the use of old_owner on the third line: once its value has been moved out, old_owner is treated as uninitialized.
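If both variables really are needed, the copy has to be requested explicitly with `clone`, which allocates a second buffer. Each buffer then has its own single owner and is freed exactly once. A minimal sketch, with `two_owners` as a hypothetical helper:

```rust
// Explicit deep copy: `clone` allocates a second buffer, so each String has its own owner.
fn two_owners() -> (String, String) {
    let old_owner = String::from("Hello, Fellows");
    let new_owner = old_owner.clone(); // copy the heap data instead of moving it
    (old_owner, new_owner) // both are usable; each buffer is freed exactly once
}

fn main() {
    let (old_owner, new_owner) = two_owners();
    println!("{}-{}", old_owner, new_owner);
    // Different heap buffers, even though the contents are equal:
    println!("{}", old_owner.as_ptr() != new_owner.as_ptr());
}
```

Making the copy explicit keeps expensive deep copies visible in the code, unlike C++ copy constructors, which run implicitly.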

```rust
fn main() {
    let m = vec![1, 2, 3, 5];
    drop(m); // Explicitly freed once.
    drop(m); // Attempt to free twice: rejected with "use of moved value: `m`".
}
```

drop is the closest Rust counterpart to free or delete in C++, but it is an ordinary function: it takes ownership of its argument, and the value is freed when the function's scope ends. Separately, the Drop trait lets a type define its own cleanup code, which the compiler calls automatically when the owner goes out of scope. Again, due to the single-ownership rule, double-free errors are ruled out at compile time. Such bugs typically do not occur in garbage-collected languages either, because the garbage collector frees an object only when nothing references it anymore.

Here, a scope is the region enclosed by a pair of curly braces. In Rust, braces do more than group code into a block: they also determine where the values created inside them are freed.
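The standard library documents `std::mem::drop` as an empty generic function that takes its argument by value. The sketch below writes an equivalent `my_drop` (a hypothetical name) and pairs it with a hypothetical `Resource` type whose `Drop` implementation counts how often its destructor runs, showing that moving a value into the function frees it exactly once.

```rust
use std::cell::Cell;

// Hypothetical type that counts how many times its destructor runs.
struct Resource<'a> {
    drops: &'a Cell<u32>,
}

impl Drop for Resource<'_> {
    fn drop(&mut self) {
        self.drops.set(self.drops.get() + 1); // cleanup code the compiler calls for us
    }
}

// Same shape as std::mem::drop: take ownership and let the value fall out of scope.
fn my_drop<T>(_value: T) {}

fn count_drops() -> u32 {
    let drops = Cell::new(0);
    let resource = Resource { drops: &drops };
    my_drop(resource); // ownership moves in; the destructor runs when my_drop returns
    // my_drop(resource); // would not compile: use of moved value `resource`
    drops.get()
}

fn main() {
    println!("destructor ran {} time(s)", count_drops()); // 1
}
```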

Memory Leak

If a program allocates memory and never frees it, whether because the programmer forgot or because of a logic error, it may eventually run out of memory, in effect a denial of service against itself.

In C/C++, a memory leak happens when memory that is no longer needed is never released, for example by forgetting to call free at the point where the memory stops being used. Manual memory management can be efficient: you can allocate large chunks of memory and release them at exactly the points in the code you choose, so memory is reused for other tasks instead of piling up. That control is also one reason garbage-collected languages are rarely used for system software such as operating systems and browsers.

However, keeping memory longer than necessary can prevent other programs from utilizing that memory.

Note that memory leaks are not specific to C/C++. They can occur in any language through logic errors, such as a cache or collection that keeps growing and is never cleared. They are simply more likely in C, where memory must be freed manually. This is why C++ has smart pointers, types that free memory automatically at the end of the scope, following the RAII (Resource Acquisition Is Initialization) pattern.

Because Rust frees each value when its owner goes out of scope, memory leaks are less likely, though not impossible. However, some resources, such as a lock on a mutex, should be released before the end of the scope.
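For a mutex, releasing early means dropping the guard returned by `lock()` as soon as the shared data is no longer needed. A minimal sketch, where `update_then_read` is a hypothetical helper:

```rust
use std::sync::Mutex;

// Release a lock early with drop() instead of holding it to the end of the scope.
fn update_then_read(shared: &Mutex<Vec<i32>>) -> usize {
    let mut guard = shared.lock().unwrap();
    guard.push(42);
    drop(guard); // the lock is released here, not at the end of the function

    // ...work that doesn't need the lock could go here...

    // Locking again is fine because the first guard is gone. Without the drop above,
    // this second lock() on the same thread would never return (deadlock or panic).
    shared.lock().unwrap().len()
}

fn main() {
    let shared = Mutex::new(vec![1, 2, 3]);
    println!("{}", update_then_read(&shared)); // 4
}
```

A nested block around the code that needs the lock achieves the same effect without an explicit `drop`.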

Consider the following code, which appears to forget to close the file, potentially leaking the file handle and causing conflicting access if another part of the program tries to use the same file.

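```rust
use std::fs::File;
use std::io::prelude::*;

fn main() -> std::io::Result<()> {
    create_file()
}

fn create_file() -> std::io::Result<()> {
    // File::create opens the file for writing (File::open would open it read-only).
    let mut file = File::create("text.txt")?;
    file.write_all(b"There is no need to close the file explicitly as there is no close method. \
        The file closes implicitly when the scope ends.")?;
    Ok(())
} // `file` goes out of scope here and the file handle is closed
```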

In this case, nothing leaks. Rust uses scope-based management to release memory and other resources when their owners go out of scope; this is how it handles resources like sockets, locks, and files. Resources and memory are different things, but Rust manages both deterministically.

Garbage collectors only manage memory, not other resources such as TCP listeners or open files. In Python, the with statement releases a resource when its block ends; otherwise the resource stays open until the object is garbage-collected or the program exits. Other languages offer similar mechanisms for handling resources beyond memory. Read the post Rust Means Never Having to Close a Socket to learn more.

However, there are situations where we might want to leak memory intentionally, for example for data that must live for the rest of the program, and Rust provides this capability through Box::leak.
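A minimal sketch of an intentional leak: `Box::leak` consumes the box and returns a reference that can be `'static`, because the allocation is simply never freed. The function name and the config string are hypothetical.

```rust
// Intentionally leak a heap allocation to obtain a reference valid for the rest of the program.
fn leaked_config() -> &'static str {
    let config = String::from("mode=production"); // hypothetical config value
    // Box::leak hands back a &'static mut str; the memory is never freed.
    Box::leak(config.into_boxed_str())
}

fn main() {
    let config: &'static str = leaked_config();
    println!("{}", config);
}
```

Calling this in a loop would leak a new allocation every time, so it belongs in one-time setup code.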

As we will see in an upcoming post about error handling, the ? operator is an ergonomic way to handle failures in Rust. On success, it evaluates to the value inside Ok (the opened File, in the example above); on failure, it immediately returns the error from the enclosing function, without proceeding further.
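Here is a small sketch of both paths of `?`, using a hypothetical helper `add_strings` built on `str::parse`, which returns `Result<i32, ParseIntError>`:

```rust
use std::num::ParseIntError;

// Hypothetical helper: parse two numbers and add them, propagating any parse error with `?`.
fn add_strings(a: &str, b: &str) -> Result<i32, ParseIntError> {
    let x = a.trim().parse::<i32>()?; // success: `?` yields the i32 inside Ok
    let y = b.trim().parse::<i32>()?; // failure: returns the Err from add_strings right away
    Ok(x + y)
}

fn main() {
    println!("{:?}", add_strings("2", "40")); // Ok(42)
    println!("{:?}", add_strings("2", "x")); // an Err value describing the invalid digit
}
```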

Iterator Invalidation

Iterator invalidation is a bug that arises when the same data is iterated over and modified at the same time, leading to subtle errors in C++, Java, or Go. No threads are needed: it happens in single-threaded code too. Suppose you write a function that appends the elements of a source vector to a target vector. What happens if the source and target are the same vector? The loop pushes new elements onto the very collection it is iterating over. In C++, when the vector's capacity is exceeded, it allocates more space and relocates its elements to a new location, leaving the iterator pointing into freed memory. This can result in various memory errors, including reading or writing memory that has already been freed.

In Python, this situation leads to an infinite loop. Java throws a ConcurrentModificationException at runtime. Neither language prevents it from happening in the first place. Java at least fails with a runtime error, but in C++ the behavior is undefined, and the cause is hard to see unless you look through the abstraction at how the container is implemented.

Rust, however, does not allow such scenarios at all. In Rust, we have granular control over the mutability of data. It's like granting permissions, or capabilities, to the data when declaring it, which is very helpful for reasoning about code later.

```rust
fn main() {
    let mut source_vector = vec![1, 2, 3];
    let mut target_vector = vec![1, 23, 3];
    copy_element_from_source_to_target(&mut source_vector, &mut target_vector);
    //copy_element_from_source_to_target(&mut source_vector, &mut source_vector);
    //copy_element_from_source_to_target(&mut target_vector, &mut target_vector);
}

fn copy_element_from_source_to_target(source: &mut Vec<usize>, target: &mut Vec<usize>) {
    for element in source.iter() {
        target.push(*element);
    }
}
```

Reproducing iterator invalidation, or concurrent access without threads, takes some deliberate effort in Rust. These are the adjustments needed to even attempt it:

The source vector is taken as mutable so that a mutating method, such as pushing more elements, could be called on it, even though this function doesn't need one.

We need to make the target vector mutable. Otherwise, we won't be able to mutate the vector at all.

The above code appends the elements of the source vector to the target vector and compiles successfully. However, if we pass the same vector as both arguments by uncommenting the second or third call, the compiler raises an error like:

```text
cannot borrow `source_vector` as mutable more than once at a time
```

Remarkably, Rust is able to detect this just by looking at the function signature. Even if we remove the for loop inside the function body, the code still produces a compilation error. Rust has a restriction that allows either one mutable reference (write) to the object or multiple immutable references (read) at a time.

Why is this a bug? If such a program were allowed to run, there are two possible outcomes. Either the loop runs forever, consuming more and more memory, because each iteration appends another element and the vector never ends; or the program crashes. Pushing elements grows the vector's capacity, which means allocating new memory from the allocator and relocating the data, so the iterator is left pointing at freed memory: a use-after-free (UAF) memory error again.

This problem is not specific to iterators. It occurs whenever the same data is accessed mutably and immutably at the same time. Rust makes this distinction explicit, because mutating and non-mutating methods have distinct signatures (taking &mut self versus &self).
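If the goal really is to append a vector to itself, Rust offers ways that don't alias a mutable and an immutable borrow. The sketch below (function names are hypothetical) shows two: `Vec::extend_from_within`, which copies a range of the vector into its own end and handles reallocation internally, and iterating over a cloned snapshot.

```rust
// Sketches of safely appending a vector to itself.
fn duplicate_in_place(v: &mut Vec<usize>) {
    // Vec::extend_from_within copies the given range inside the vector and handles
    // any reallocation itself, so no outside reference or iterator is left dangling.
    v.extend_from_within(..);
}

fn duplicate_via_snapshot(v: &mut Vec<usize>) {
    // Iterate over a separate copy, so the vector being pushed to is not being read.
    let snapshot = v.clone();
    for element in snapshot.iter() {
        v.push(*element);
    }
}

fn main() {
    let mut a = vec![1, 2, 3];
    duplicate_in_place(&mut a);
    let mut b = vec![1, 2, 3];
    duplicate_via_snapshot(&mut b);
    println!("{:?} {:?}", a, b); // [1, 2, 3, 1, 2, 3] [1, 2, 3, 1, 2, 3]
}
```

Both versions terminate, because the number of elements to copy is fixed before any push happens.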

You can try running this Python code in your editor or an online Python interpreter; it won't terminate without user intervention. In Rust, the equivalent code is rejected at compile time, so the infinite loop never gets a chance to happen.

```python
data = [1, 2, 4]
for i in data:  # reads the data, iterating
    data.append(i)  # after this line, the list's length increases, leading to another loop iteration
```

What about fixed-size data such as arrays? Arrays in Rust have no methods that change their length, such as push, so they only allow in-place modification.
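In-place modification of an array while iterating is therefore safe: `iter_mut` hands out a unique reference to one element at a time, and nothing can resize the array underneath it. A minimal sketch with a hypothetical `double_all`:

```rust
// Arrays have a fixed length: there is no push, so modifying in place while iterating is safe.
fn double_all(mut data: [i32; 3]) -> [i32; 3] {
    for x in data.iter_mut() {
        *x *= 2; // in-place update through a unique (&mut) reference to each element
    }
    // data.push(8); // would not compile: arrays have no push method
    data
}

fn main() {
    println!("{:?}", double_all([1, 2, 4])); // [2, 4, 8]
}
```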

Concurrency Bugs

Imagine a classroom where everyone is copying math notes from the blackboard. Suddenly, someone finishes copying the notes and erases them from the board while everyone else is still copying. What are some possible scenarios?

They will completely erase the notes, leaving a new person who starts copying completely lost.

They will partially erase the notes. The person erasing is kind of weird, though. Either they erase the bottom notes, affecting anyone who has copied the top but not yet the bottom, or they erase the top notes, so anyone who starts late never gets the top part. (Don't worry, one of your friends might have a full copy.)

This is what happens when more than one thread is involved. Many concurrency bugs are memory issues that arise when threads share data without proper synchronization. They are caused by data races, where multiple threads access the same memory concurrently and at least one of them writes to it. Writing concurrent programs is challenging. Functional languages like Haskell, Erlang, and Elixir, and imperative languages like Go, offer better abstractions for writing concurrent code, so less experience is needed. However, their APIs can still be misused, and it is often advisable to avoid shared mutable state altogether.

Rust's type system forces you to use a synchronization mechanism before mutable data can be shared among threads. Thanks to its restrictions on aliasing and mutation, Rust lets you write low-level concurrent code similar to that in C/C++, but with stronger safety guarantees. In the code snippet below, it's impossible to access the data inside the mutex without first locking it, which ensures exclusive access. This approach tightly binds the data to the permission to access it. Note that the example is single-threaded for simplicity.

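```rust
use std::sync::Mutex;

fn main() {
    let sync_type = Mutex::new(45);
    *sync_type += 10; // error: a Mutex cannot be dereferenced; it must be locked first
    let temp = sync_type.lock(); // returns a Result wrapping the guard, not the data itself
    //let mut temp = sync_type.lock().unwrap(); // handles the Result and binds mutably
    *temp += 10; // error while `temp` is an unhandled Result bound immutably
}
```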

How Rust Protects Us from Accessing Data Inside Mutex:

1) In the second line, attempting to access the data directly inside the Mutex results in a compile error, forcing us to use the lock method on the mutex. The first disaster is averted.

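2) The lock method returns a Result, indicating that the operation is fallible: it returns an error if another thread panicked while holding the lock (the mutex is then said to be poisoned). Without handling that Result, we cannot access the data.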

3) Without making the temporary variable mutable, we can't mutate the data. Rust only allows reading in this case.

But where do we unlock the mutex? We don't need to do it explicitly: lock gives us a guard, and when the guard goes out of scope, the lock is released automatically. The same principle closes the file automatically in the earlier example. In just a couple of lines of code, we can see how Rust refuses to compile code that misuses these APIs. Rust also supports concurrency models other than shared memory, such as message passing over channels.
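The example above is single-threaded. To share the mutex between threads, it is typically wrapped in an `Arc` (an atomically reference-counted pointer) so that each thread owns a handle to the same data. A minimal sketch with a hypothetical `parallel_increment` helper:

```rust
use std::sync::{Arc, Mutex};
use std::thread;

// Arc provides shared ownership across threads; Mutex provides exclusive access.
fn parallel_increment(threads: usize, per_thread: usize) -> usize {
    let counter = Arc::new(Mutex::new(0usize));
    let mut handles = Vec::new();
    for _ in 0..threads {
        let counter = Arc::clone(&counter);
        handles.push(thread::spawn(move || {
            for _ in 0..per_thread {
                // The guard is a temporary, so the lock is released at the end of this statement.
                *counter.lock().unwrap() += 1;
            }
        }));
    }
    for handle in handles {
        handle.join().unwrap();
    }
    // Using Rc instead of Arc, or dropping the Mutex and mutating a plain usize,
    // would be rejected at compile time.
    let total = *counter.lock().unwrap();
    total
}

fn main() {
    println!("{}", parallel_increment(4, 1000)); // 4000
}
```

Because every increment happens under the lock, the total is always exact; there is no data race for the compiler to allow.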

However, memory-safe languages like Rust don't make programs immune to all bugs. While they significantly reduce certain memory-related bugs, it's still best practice to thoroughly test and review code for other potential issues, such as deadlocks and logic errors.

One real-world example is a data race affecting GitHub user sessions, in code written in Ruby. A bug like this is unlikely in safe Rust, because the compiler refuses to compile code containing a data race. Rust rules out data races (though not every kind of race condition), which significantly reduces hard-to-debug issues in production systems.

Using memory-safe languages prevents certain memory-related bugs from occurring in the first place. But for performance-critical software, the traditional choices have been C and C++, which aren't inherently memory-safe.

Reasons Managed Languages Aren't Used Where C/C++ Are, Besides Runtime Performance:

Garbage-collected (GC) languages rarely interoperate well with each other. They abstract the hardware away from the programmer, which can prevent access to hardware features like AVX or SSE SIMD extensions unless the language runtime exposes them. Optimizing memory usage also means working with, or around, the runtime. In C++ and Rust, no such runtime stands in the way: the compiler extracts a wealth of type information from the program, and the backend uses it to optimize for the target hardware. Rust strikes a balance, offering high-level abstractions and memory safety like GC languages, and low-level control like C++ without a heavyweight runtime.

The image is sourced from the YouTube video by Niko Matsakis

Google chose Rust for writing new native code in Android, and it's also used in the Fuchsia operating system. Apple introduced Swift as an alternative to Objective-C: a memory-safe language for its platforms (and also open source), with modern language features and deterministic memory management through automatic reference counting. Prossimo is an ISRG project that aims to move critical Internet software to a memory-safe language, Rust. Servo is an open-source experimental browser engine written from scratch in Rust, designed to take advantage of multicore processors while avoiding mistakes that are easy to make in C++.

Reference

Fearless Concurrency with Rust

Microsoft chooses Rust for writing new code

Why Memory safety is important

Fearless Security: Memory Safety

C++ rules to follow to avoid undefined behavior at runtime. In Rust, the compiler has your back: these bugs are caught at compile time, as long as we don't use unsafe without careful review.